Post #2 focuses on how we handle our data and make it more accessible.

Modeling challenges and boilerplate

Building a model involves many common parts.

First is handling the input. Even after the Data Collection process has sorted out the data, we still need to read it, split it correctly into train and test sets, and read the data for our simulation process.
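The post doesn't say how the split is done. One common way to make it reproducible is to hash a stable key, so every record for a given user always lands in the same split. The sketch below shows that idea. It is a hypothetical illustration, not the framework's code. The `user_id` key, the `assign_split`/`split_records` helpers and the 20% test / 10% simulation fractions are all assumptions.

```python
import hashlib
from typing import Dict, Iterable, List


def assign_split(key: str, test_frac: float = 0.2, sim_frac: float = 0.1) -> str:
    """Deterministically map a record key to 'train', 'test' or 'simulation'."""
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    # First 32 bits of the hash, scaled into [0, 1).
    bucket = int(digest[:8], 16) / 2**32
    if bucket < test_frac:
        return "test"
    if bucket < test_frac + sim_frac:
        return "simulation"
    return "train"


def split_records(
    records: Iterable[Dict],
    key_field: str = "user_id",  # hypothetical key column
    test_frac: float = 0.2,
    sim_frac: float = 0.1,
) -> Dict[str, List[Dict]]:
    """Group records into the three splits; a given key always lands in the same split."""
    splits: Dict[str, List[Dict]] = {"train": [], "test": [], "simulation": []}
    for rec in records:
        name = assign_split(str(rec[key_field]), test_frac, sim_frac)
        splits[name].append(rec)
    return splits
```

Spark's `DataFrame.randomSplit(weights, seed)` gives a quick row-level split, but it is not keyed by user. Keying on a hash keeps one user's history from being spread across train, test and simulation.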

Another common part is evaluating the test metrics: running the model on the test data and displaying metrics such as MSE, AUC, and others to see how well the model performs.
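For reference, here are the textbook definitions of the two metrics named above. This is a minimal pure-Python sketch, not the framework's evaluation code. On Spark DataFrames, Spark ML's `RegressionEvaluator` and `BinaryClassificationEvaluator` compute the same quantities. AUC is computed here as the probability that a randomly chosen positive example scores higher than a randomly chosen negative one, with ties counting as half.

```python
from typing import Sequence


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean squared error between labels and predictions."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty sequences of equal length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC: probability that a random positive scores above a random negative.

    Ties count as half a win. This pairwise version is O(P * N) and meant only
    as an illustration; at scale, use a sort-based or library implementation.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative label")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))
```

For example, `auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])` is 0.75. Three of the four positive/negative pairs are ordered correctly.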

Third is checking the business metrics.

Before trying a model in production, we want to simulate how it behaves with respect to the business KPIs.

We evaluate a number of metrics that serve as good proxies for business performance.

Our goal with this framework is to make data scientists’ lives easier, letting them focus on the models rather than on writing time-consuming boilerplate code.

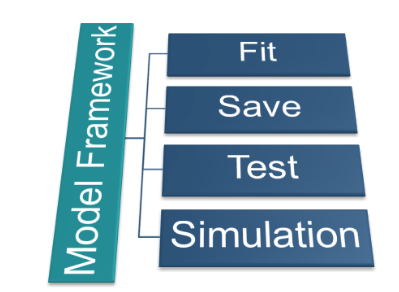

Model Framework

We wanted to create a framework that includes these parts out of the box: it runs the fitting process, saves the model, tests its performance, and runs the simulation.

All the data scientists need to focus on is their model’s logic.

They can use Spark ML packages, open-source implementations, or their own in-house implementations; they get the rest “for free” from the framework.

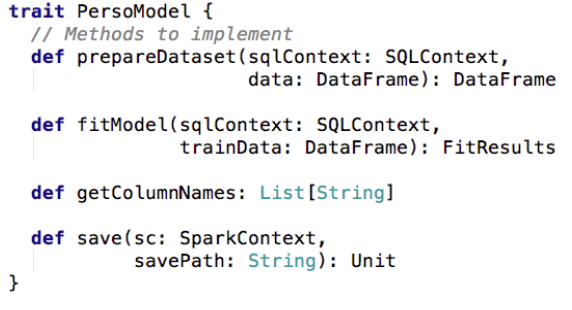

The interface they need to implement is simple:

- Preparing the dataset – extracting new features, transforming the data.

- Fitting the model on the data – the actual logic of the algorithm.

- Returning the column names (features) that the model requires in order to operate.

- Saving a representation of the model for later use.
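Here is one way to express the four responsibilities listed above as an abstract base class. This is a hypothetical sketch. The class and method names (`ModelImpl`, `prepare_dataset`, `fit`, `required_columns`, `save`) are illustrative, and the real framework works on Spark DataFrames rather than lists of dicts. The toy `MeanCtrModel` exists only to show a concrete implementation.

```python
import json
from abc import ABC, abstractmethod
from typing import Dict, List

Row = Dict[str, float]


class ModelImpl(ABC):
    """Hypothetical contract a data scientist implements; the framework does the rest."""

    @abstractmethod
    def prepare_dataset(self, rows: List[Row]) -> List[Row]:
        """Extract new features and transform the data."""

    @abstractmethod
    def fit(self, rows: List[Row]) -> None:
        """The actual logic of the algorithm."""

    @abstractmethod
    def required_columns(self) -> List[str]:
        """Column names (features) the model needs in order to operate."""

    @abstractmethod
    def save(self) -> str:
        """A serialized representation of the model for later use."""


class MeanCtrModel(ModelImpl):
    """Toy implementation: learns the average click-through rate."""

    def __init__(self) -> None:
        self.mean_ctr: float = 0.0

    def prepare_dataset(self, rows: List[Row]) -> List[Row]:
        # Derive a 'ctr' feature; rows with no impressions get 0.0.
        return [
            dict(r, ctr=r["clicks"] / r["impressions"] if r["impressions"] else 0.0)
            for r in rows
        ]

    def fit(self, rows: List[Row]) -> None:
        self.mean_ctr = sum(r["ctr"] for r in rows) / len(rows)

    def required_columns(self) -> List[str]:
        return ["clicks", "impressions"]

    def save(self) -> str:
        return json.dumps({"model": "MeanCtrModel", "mean_ctr": self.mean_ctr})
```

Keeping the contract this small is what lets the framework own reading, splitting, evaluation and storage. The implementation never has to know where its data came from.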

Models productization

The final part of the framework bridges research and production.

We want this transition to be as simple and fast as possible, to allow us to reach conclusions quickly and keep improving.

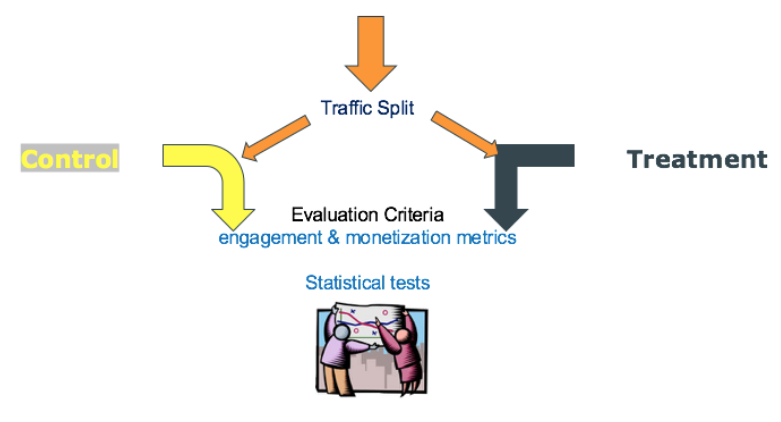

First, we want to allow fast and easy A/B testing of new models.

A quick reminder of how A/B tests work: we split the population into two independent groups of similar users.

We serve one group the treatment (the new model) and the other group the control (the production baseline the system currently uses).

After running for a while, we analyze the data, evaluate engagement and monetization metrics using statistical tests, and conclude whether the treatment provides a significant improvement.
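The sketch below illustrates both halves of that process under stated assumptions. Users are assigned deterministically by hashing their ID together with an experiment name. A rate-style metric (for example, a click or conversion rate) is then compared with a two-proportion z-test. The post does not say which tests are used. The z-test is one standard choice for rates; revenue-style metrics usually call for a different test. The function names are hypothetical.

```python
import hashlib
import math
from typing import Tuple


def assign_variant(user_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Deterministically assign a user to 'treatment' or 'control' for one experiment."""
    # Salting with the experiment name decorrelates assignments across experiments.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 2**32  # uniform in [0, 1)
    return "treatment" if bucket < treatment_share else "control"


def two_proportion_z_test(
    conv_control: int, n_control: int, conv_treatment: int, n_treatment: int
) -> Tuple[float, float]:
    """Two-sided z-test for a difference between two conversion rates.

    Returns (z, p_value); a positive z means the treatment rate is higher.
    """
    p_c = conv_control / n_control
    p_t = conv_treatment / n_treatment
    pooled = (conv_control + conv_treatment) / (n_control + n_treatment)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_control + 1 / n_treatment))
    if se == 0:
        raise ValueError("degenerate data: pooled rate is 0 or 1")
    z = (p_t - p_c) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value
```

As an example, 100 conversions out of 1,000 in control versus 150 out of 1,000 in treatment gives z ≈ 3.4. The two-sided p-value is well below 0.01.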

To support this, we added a step at the end of the framework.

The step reads the model’s coefficients and updates the A/B test configuration with a variant that serves a small portion of our users with the new model.
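The post doesn't describe the A/B configuration format. The sketch below assumes a hypothetical JSON-like structure: a list of variants, each with a traffic `share` and its `coefficients`. It adds a new variant with a small share and scales the existing variants down proportionally, so the shares still sum to 1. The function returns a new config instead of mutating the input, which makes the change easy to diff or roll back.

```python
import copy
from typing import Dict


def add_variant(config: Dict, name: str, coefficients: Dict[str, float], share: float) -> Dict:
    """Return a copy of `config` with a new variant serving `share` of the traffic.

    Existing variants are scaled down proportionally so the shares still sum to 1.
    The input config is not modified.
    """
    if not 0.0 < share < 1.0:
        raise ValueError("share must be strictly between 0 and 1")
    updated = copy.deepcopy(config)
    if any(v["name"] == name for v in updated["variants"]):
        raise ValueError(f"variant {name!r} already exists")
    for variant in updated["variants"]:
        variant["share"] *= 1.0 - share
    updated["variants"].append(
        {"name": name, "share": share, "coefficients": dict(coefficients)}
    )
    return updated
```

Carving the new share out of every existing variant keeps their relative proportions intact. The alternative is to take it only from the control, which also works but changes the baseline's exposure.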

Similarly, once a model has proven its value, we want to retrain it on a regular basis so it keeps learning from the new data we collect.

- We run the whole modeling flow on a regular basis, triggered by our ETL engine, and a new model is created with updated variables.

- On each run, we validate the business metrics to make sure the model keeps performing well and doesn’t deteriorate.

- Finally, if the metrics are positive, we update the production configurations.
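The "doesn't deteriorate" check can be written as a small gate that compares the freshly trained model's metrics against the current production values. This is a hypothetical sketch. The 2% tolerance, the metric names and the assumption that higher is better for every metric are all illustrative choices, not details from the post.

```python
from typing import Dict


def should_promote(
    new_metrics: Dict[str, float],
    prod_metrics: Dict[str, float],
    max_relative_drop: float = 0.02,
) -> bool:
    """Promote only if no production metric deteriorates by more than `max_relative_drop`.

    Assumes higher is better for every metric; a metric missing from the new
    run counts as a failed check.
    """
    for name, baseline in prod_metrics.items():
        candidate = new_metrics.get(name)
        if candidate is None:
            return False
        floor = baseline - abs(baseline) * max_relative_drop
        if candidate < floor:
            return False
    return True
```

A small tolerance avoids blocking every retrain over noise. Treating a missing metric as a failure keeps a broken run from silently reaching production.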

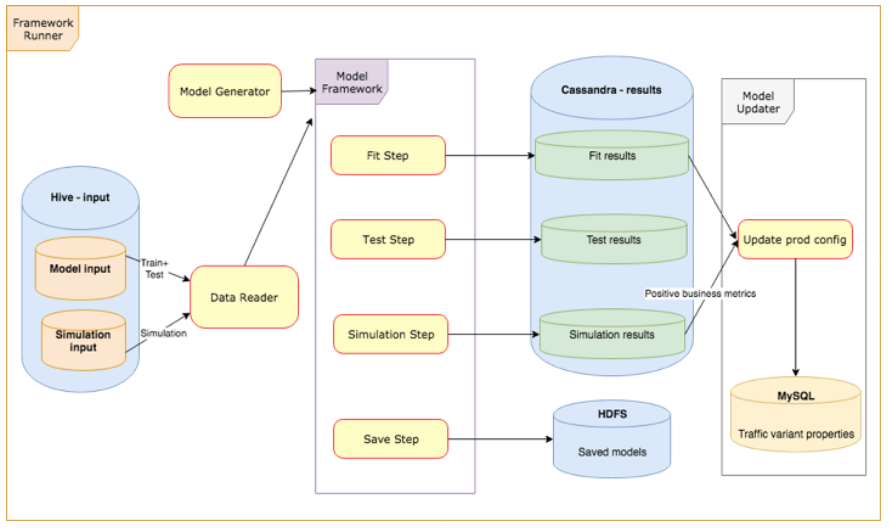

High-level design

Here is a quick high-level overview of this part of the system:

Our input data is stored in two Hive tables after being prepared by the Data Collection process.

The model is created by the model generator, which initializes the implementation based on the job’s configuration.
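One simple way to build such a generator is a name-to-class registry that the job configuration refers to. This is a minimal sketch under that assumption. The `model` and `params` config keys, the decorator and the placeholder class are hypothetical. A JVM implementation might instead load a class by its fully qualified name via reflection.

```python
from typing import Any, Callable, Dict, Type

_MODEL_REGISTRY: Dict[str, Type[Any]] = {}


def register_model(name: str) -> Callable[[Type[Any]], Type[Any]]:
    """Class decorator that makes an implementation selectable by name."""
    def decorator(cls: Type[Any]) -> Type[Any]:
        if name in _MODEL_REGISTRY:
            raise ValueError(f"model {name!r} is already registered")
        _MODEL_REGISTRY[name] = cls
        return cls
    return decorator


def generate_model(job_config: Dict[str, Any]) -> Any:
    """Instantiate the implementation named in the job configuration."""
    name = job_config["model"]
    try:
        cls = _MODEL_REGISTRY[name]
    except KeyError:
        raise ValueError(f"unknown model {name!r}") from None
    return cls(**job_config.get("params", {}))


@register_model("logistic_regression")
class LogisticRegressionModel:
    """Placeholder implementation used only to show the lookup."""

    def __init__(self, reg_param: float = 0.0, max_iter: int = 100) -> None:
        self.reg_param = reg_param
        self.max_iter = max_iter
```

For example, `generate_model({"model": "logistic_regression", "params": {"reg_param": 0.1}})` returns a `LogisticRegressionModel` with `reg_param=0.1` and the default `max_iter`.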

The framework then runs all the common parts:

- Reads the data and splits it into train, test, and simulation sets.

- Calls the model’s fit implementation and saves the result.

- Uses the model for test data predictions and stores the results for analysis.

- Runs the model on the simulation data and calculates the business KPI simulation metrics.

- Saves the model to HDFS for later use.

All the results are stored in Cassandra.
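The shared steps can be read as one orchestration function that any implementation plugs into. The sketch below is a simplified, in-memory stand-in. A plain dict replaces Cassandra and HDFS, lists of dicts replace Spark DataFrames, and the split, metric and simulation functions are passed in. All names are hypothetical. The toy model's `predict` method is also an assumption, since the post doesn't say how the framework scores test data.

```python
import json
from typing import Any, Callable, Dict, List

Row = Dict[str, float]
Split = Callable[[List[Row]], Dict[str, List[Row]]]
Metric = Callable[[List[float], List[float]], float]


class MeanModel:
    """Toy implementation standing in for a data scientist's model."""

    def __init__(self) -> None:
        self.mean = 0.0

    def prepare_dataset(self, rows: List[Row]) -> List[Row]:
        return rows  # no feature extraction in this toy

    def fit(self, rows: List[Row]) -> None:
        self.mean = sum(r["label"] for r in rows) / len(rows)

    def predict(self, row: Row) -> float:
        return self.mean

    def save(self) -> str:
        return json.dumps({"mean": self.mean})


def run_job(
    model: Any,
    rows: List[Row],
    split: Split,
    metrics: Dict[str, Metric],
    simulate: Callable[[Any, List[Row]], Dict[str, float]],
    store: Dict[str, Any],
) -> Dict[str, Any]:
    """Run the shared steps; `store` is an in-memory stand-in for Cassandra and HDFS."""
    # 1. Read the data and split it into train, test and simulation sets.
    data = split(model.prepare_dataset(rows))
    # 2. Call the model's fit implementation and save the result.
    model.fit(data["train"])
    store["fit_output"] = model.save()
    # 3. Predict on the test data and store the results for analysis.
    labels = [r["label"] for r in data["test"]]
    preds = [model.predict(r) for r in data["test"]]
    store["test_predictions"] = preds
    store["test_metrics"] = {name: fn(labels, preds) for name, fn in metrics.items()}
    # 4. Run the model on the simulation data and compute business KPI proxies.
    store["simulation_kpis"] = simulate(model, data["simulation"])
    # 5. Save the model for later use (HDFS in the described design).
    store["model_artifact"] = model.save()
    return store
```

Because `run_job` only depends on the model's methods, swapping in a different implementation changes nothing in the pipeline.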

The last part is the productization step:

It gets the updated model variables from the fit output, validates the simulation metrics to verify the model’s performance, and updates the production configurations in MySQL.
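The sketch below shows the shape of this step: validate, then write. It checks each simulation metric against a minimum and upserts the coefficients into a configuration table. It uses Python's built-in `sqlite3` with an in-memory database as a stand-in for MySQL. The `model_config` table, its columns and the per-metric minimums are hypothetical.

```python
import json
import sqlite3
from typing import Dict


def create_schema(conn: sqlite3.Connection) -> None:
    # Hypothetical table holding the coefficients that production reads.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS model_config ("
        " model_name TEXT PRIMARY KEY,"
        " coefficients TEXT NOT NULL)"
    )


def productize(
    conn: sqlite3.Connection,
    model_name: str,
    coefficients: Dict[str, float],
    simulation_metrics: Dict[str, float],
    minimums: Dict[str, float],
) -> bool:
    """Validate simulation metrics, then upsert the model's coefficients.

    Returns True if the production configuration was updated.
    """
    for metric, minimum in minimums.items():
        # A missing metric is treated as failing validation.
        if simulation_metrics.get(metric, float("-inf")) < minimum:
            return False
    with conn:  # commits on success, rolls back if an exception is raised
        conn.execute(
            "INSERT OR REPLACE INTO model_config (model_name, coefficients) VALUES (?, ?)",
            (model_name, json.dumps(coefficients, sort_keys=True)),
        )
    return True
```

Against MySQL, the same upsert would typically be written as `INSERT ... ON DUPLICATE KEY UPDATE`. Doing the validation before opening the transaction means a failing model never touches the production table.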

Takeaways

To sum up, here are the key lessons we learned that evolved into this framework:

- Prepare your data well – this is crucial to enable high-scale modeling! I cannot overemphasize how important this is to avoid drowning in data and spending much of your research time on endless queries.

- Build an effective research cycle – invest the time to build a good big data machine learning framework. It will really pay off in the long run and keep your data scientists productive and happier.

- Connect research and production – research results are worthless if it takes forever to apply them in your product. Shorter cycles will enable you to try out more models and implementations and keep improving.

Aim to make this as quick and easy as possible.